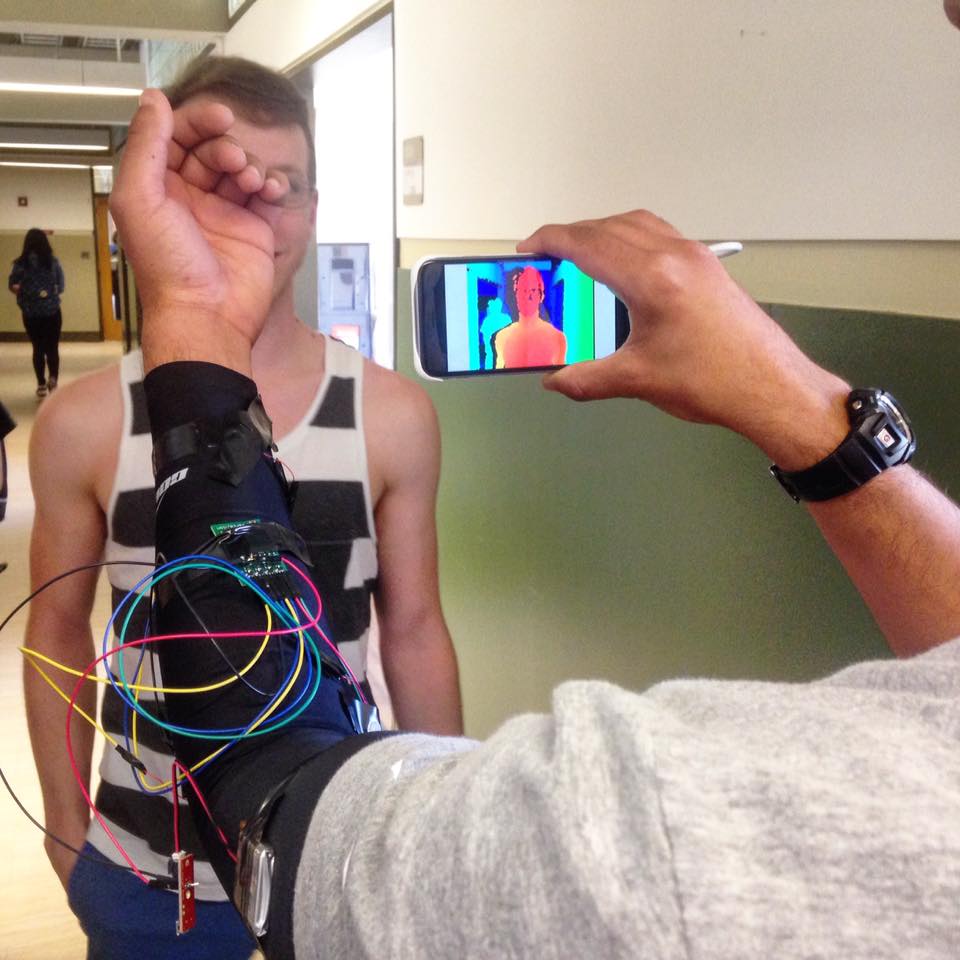

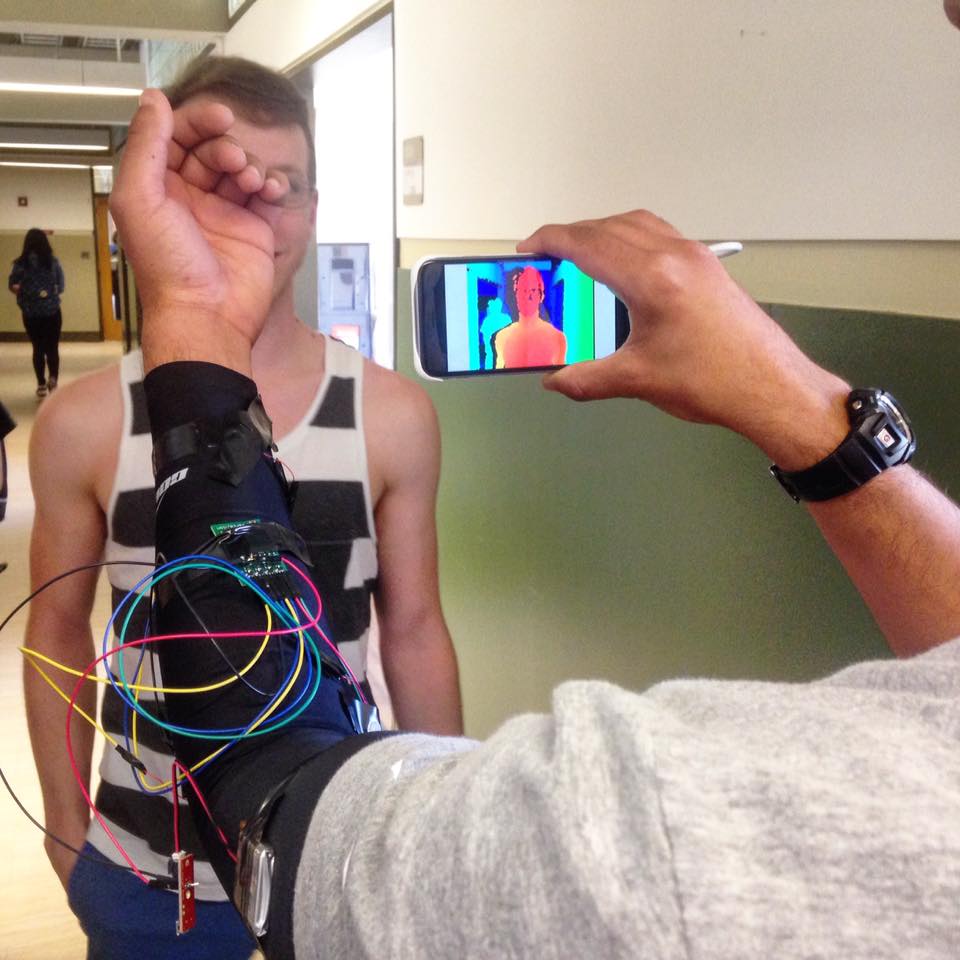

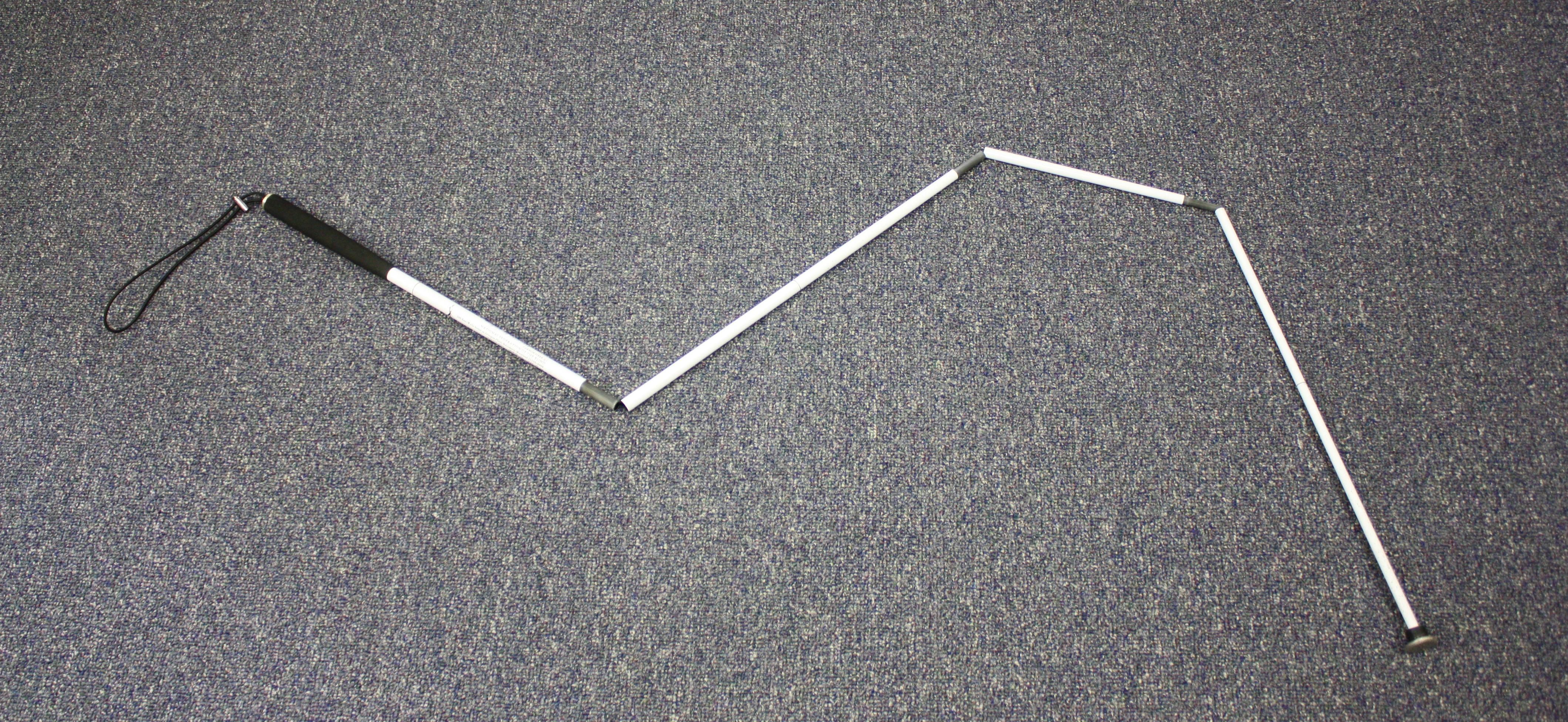

Perception is a passive obstacle detection device for visually impaired people. Currently, visually impaired people primarily use a white cane to actively search for and avoid obstacles in their path. This approach has two main drawbacks: an obstacle goes undetected if the cane doesn't make contact with it, and obstacles that are not connected to the ground (e.g. overhanging ceilings, open cabinets) are missed entirely. Perception aims to let visually impaired people detect a wider range of obstacles and to give them more accurate information about their surroundings, without forcing them to actively seek out obstacles in their environment. It does this by using the Structure depth-sensing camera connected to an iPhone or iPad.

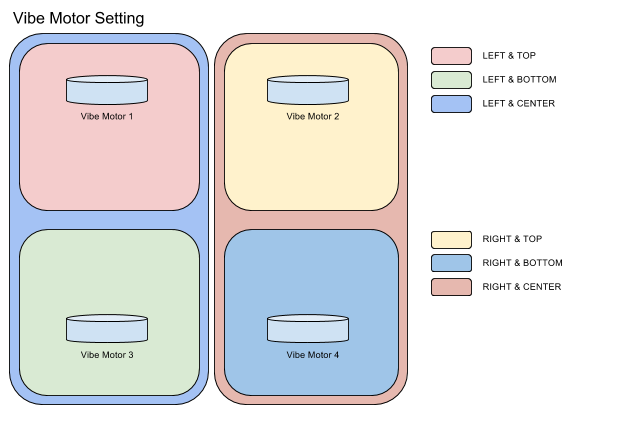

Another main goal is to make Perception as easy to use as possible. To that end, Perception will give haptic and audio feedback to alert the user to possible obstacles around them. This feedback will be intuitive to understand and will not interfere with the senses that visually impaired people have come to rely on. In addition, Perception capitalizes on the fact that a large number of visually impaired people already have access to an iPhone or an iPad, so few additional parts are needed for users to start using Perception.