Introduction

Concept

We want to create an input control system that speaks with the machine world, and we call it ezpze. In terms of functionality, ezpze is very similar to a trackpad. However, a conventional trackpad has fixed dimensions and is either attached to your machine or not easily transported. We want to create a trackpad that is easily scalable in size, highly portable, and operates at low power.

Design Requirements

Low Power

We want this device to be portable and to have its own rechargeable power source for an optimum user experience. To achieve this, each component must operate at relatively low power.

Position Triangulation

This is a necessity for the device to be usable in a real system. Most modern devices have thousands of pixels on the screen, so we need to achieve a certain level of granularity for the device to be useful.
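To illustrate the granularity requirement, here is a minimal sketch that assumes the 8-by-8 Grideye array mentioned in the timeline and a hypothetical 1920x1080 screen. Read cell by cell, each Grideye cell would cover 1920 / 8 = 240 pixels horizontally, which is too coarse for a cursor. One common way to get finer positions is a temperature-weighted centroid, which interpolates between cells. The function names, the `ambient` parameter, and the screen size are assumptions for illustration, not our actual implementation.

```python
from typing import List, Tuple

GRID = 8  # the Grideye reports an 8-by-8 array of temperatures


def weighted_centroid(frame: List[List[float]], ambient: float) -> Tuple[float, float]:
    """Return the (x, y) heat centroid in grid units, each in [0, GRID - 1].

    Cells at or below `ambient` get zero weight, so the background
    does not pull the estimate toward the middle of the array.
    """
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(frame):
        for x, temp in enumerate(row):
            weight = max(temp - ambient, 0.0)
            total += weight
            sum_x += weight * x
            sum_y += weight * y
    if total == 0.0:
        raise ValueError("no cell is warmer than ambient")
    return sum_x / total, sum_y / total


def to_pixels(cx: float, cy: float, width: int = 1920, height: int = 1080) -> Tuple[int, int]:
    """Map grid coordinates in [0, GRID - 1] linearly onto screen pixels."""
    px = round(cx / (GRID - 1) * (width - 1))
    py = round(cy / (GRID - 1) * (height - 1))
    return px, py
```

With two equally warm neighbouring cells, the centroid falls halfway between them, so the estimate is no longer limited to 8 distinct positions per axis.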

Wireless Communication

To make the device as usable as possible, a highly desired requirement is for the components to communicate wirelessly with each other, and for the device to communicate wirelessly with the controlling system.

Human Interaction

This device must be able to take in user input in the form of touch on a surface and convert the signals into a language that existing systems can understand for control. This can be assessed by connecting the device to an existing system (e.g. a cursor) and controlling it.

Architecture

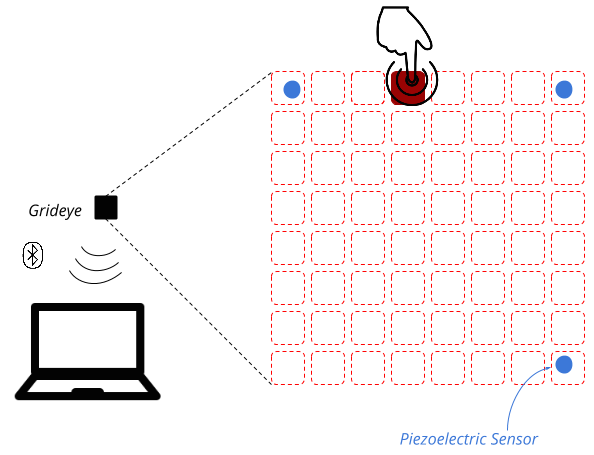

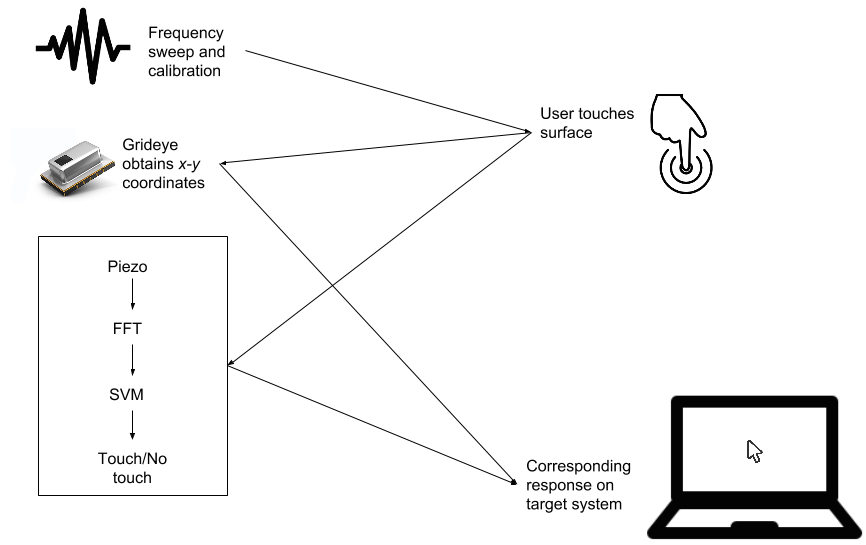

Sensing touch is a two-fold process. First, the system must triangulate the location of the finger. Second, the system must verify the nature of the touch or interaction with the surface. We achieve these using the Grideye, which locates the finger from its heat signature, and three piezoelectric sensors, which detect the touch.

The system architecture is outlined in the figure above. The nodes represent the combination of sensors that identify interaction with the Touch Surface. The Slave Nodes simply send their readings to the Master Node. The Master Node combines these readings with its own to calculate the location of the user's touch input. It then sends the result to the target system, represented by the laptop.
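The data flow described above could be sketched as follows. This is only an illustration under stated assumptions: the `NodeReading` and `TouchEvent` types, the boolean per-node touch decision, and the majority vote are all hypothetical, since the exact message format and combination rule are not specified here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class NodeReading:
    """One node's report (assumed format): who sent it and whether it sensed a touch."""
    node_id: str
    touched: bool


@dataclass(frozen=True)
class TouchEvent:
    """What the Master Node would send to the target system."""
    x: float
    y: float


def combine(
    own: NodeReading,
    from_slaves: List[NodeReading],
    grideye_xy: Optional[Tuple[float, float]],
) -> Optional[TouchEvent]:
    """Merge the Master Node's own reading with the Slave Nodes' readings.

    Assumption: a touch is reported only when a strict majority of nodes
    agree and the Grideye has produced a finger position.
    """
    readings = [own, *from_slaves]
    votes = sum(r.touched for r in readings)
    if grideye_xy is None or votes * 2 <= len(readings):
        return None
    return TouchEvent(*grideye_xy)
```

A majority vote is just one simple way to tolerate a single noisy piezoelectric sensor; a weighted or learned combination would fit the same interface.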

User Interaction

- The system sweeps from 20 kHz to 40 kHz and calibrates its SVM classifier based on the response.

- The system then scans every 50 ms and determines whether it is being touched or not.

- Meanwhile, the Grideye determines the x-y coordinates of the finger from the heat signature.

- Based on the combined result of the piezoelectric sensors and the Grideye, the cursor on the laptop is controlled.
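The calibrate-then-scan steps above can be sketched as below. To keep the example self-contained, it swaps our SVM classifier for a much simpler nearest-mean classifier, so it shows the shape of the pipeline rather than the actual model. The `read_amplitude` callback, the number of sweep steps, and all function names are hypothetical.

```python
import math
import time
from typing import Callable, List, Sequence

SWEEP_START_HZ = 20_000
SWEEP_END_HZ = 40_000
SCAN_PERIOD_S = 0.050  # scan every 50 ms


def sweep_frequencies(steps: int = 21) -> List[float]:
    """Evenly spaced frequencies from 20 kHz to 40 kHz inclusive."""
    step = (SWEEP_END_HZ - SWEEP_START_HZ) / (steps - 1)
    return [SWEEP_START_HZ + i * step for i in range(steps)]


def measure_profile(read_amplitude: Callable[[float], float],
                    freqs: Sequence[float]) -> List[float]:
    """Record the sensor response at each frequency of the sweep."""
    return [read_amplitude(f) for f in freqs]


def mean_profile(profiles: Sequence[Sequence[float]]) -> List[float]:
    """Average several profiles frequency by frequency."""
    return [sum(column) / len(profiles) for column in zip(*profiles)]


class NearestMeanTouchClassifier:
    """Stand-in for the SVM: label a profile by the closer calibration mean."""

    def __init__(self, untouched_profiles, touched_profiles):
        self.untouched = mean_profile(untouched_profiles)
        self.touched = mean_profile(touched_profiles)

    def is_touched(self, profile: Sequence[float]) -> bool:
        return math.dist(profile, self.touched) < math.dist(profile, self.untouched)


def scan(read_amplitude, classifier, freqs, cycles: int, sleep=time.sleep) -> List[bool]:
    """Run a fixed number of scan cycles, pausing SCAN_PERIOD_S after each one."""
    results = []
    for _ in range(cycles):
        results.append(classifier.is_touched(measure_profile(read_amplitude, freqs)))
        sleep(SCAN_PERIOD_S)
    return results
```

In a full loop, each `True` result would gate the Grideye's x-y estimate before it is forwarded to the cursor; a `False` result would leave the cursor where it is.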

Competitive Analysis

Touch & Activate

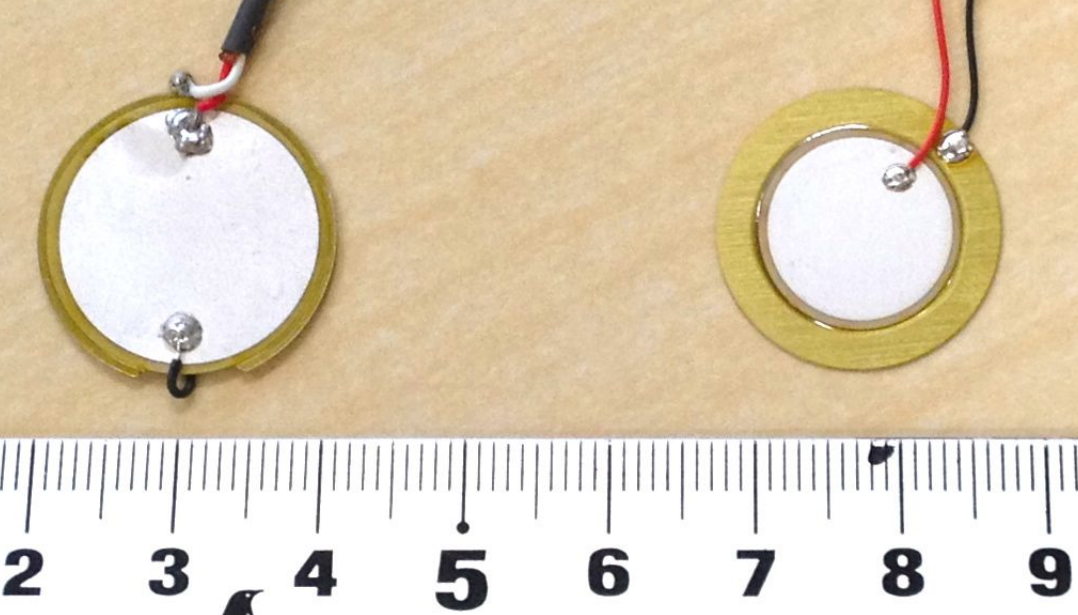

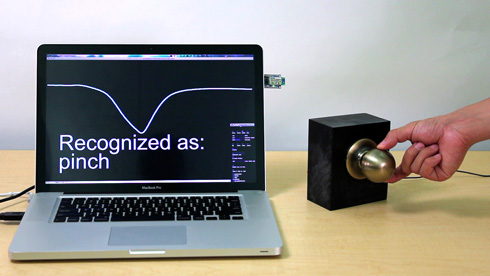

Touch & Activate is a novel touch sensing technology published by researchers from the University of Tsukuba in Japan at UIST’13. Their technology centers on acoustic sensing, taking advantage of the fact that a solid object's resonant profile changes when a human touches it.

Touché

Touché is built by Disney Research and uses Swept Frequency Capacitive Sensing. Instead of acoustic sensing, it reads the capacitive response when humans touch objects. However, unlike conventional binary capacitive sensing, it creates a range of response values by sweeping across a range of frequencies.

Timeline

- Feb 14: Finished design proposal.

- Feb 20: Began experimenting with a variety of piezoelectric sensors.

- March 3: Obtained a shift in resonance frequency upon taps on a controlled surface.

- March 20: Used the Grideye to display an 8-by-8 array of heat signatures in a browser. See GitHub.

- April 16: Obtained a shift in resonance frequency upon contact with a controlled surface.

- April 25: Used an SVM classifier to learn shifts in resonance frequency.

Team

Mihir Pandya

Chief Emailing Officer

System Architect

Mirai Akagawa

"Problem Solva"

Machine Learner

Elena Feldman

Chief Ordering Officer

Hardware Expert

Lucy Qian

Chief Demo Officer

Professional Solder-er